3 Oct 2025

By Code Particle · 8 min read

The rise of AI in software development has been nothing short of revolutionary. Tools like GitHub Copilot, Windsurf, Cursor, and ChatGPT are making it possible to generate entire codebases in a fraction of the time.

For most startups, this is a welcome boost. But for healthcare organizations, it’s a very different story. When you’re handling protected health information (PHI), these tools can pose serious compliance and security risks.

Let’s unpack why — and what safer alternatives look like.

Modern AI developer tools fall into two main buckets:

The common thread: they work by sending your prompts and code snippets to external servers for processing.

That’s fine if you’re building a weather app. It’s not fine if your codebase handles PHI.
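To make that concrete, here is a minimal sketch of what an editor-integrated assistant might bundle into a single request. The endpoint URL, function name, and payload fields are hypothetical and do not describe any vendor's actual API. The point is only that the prompt and any attached code leave the developer's machine together.

```python
import json

# Hypothetical endpoint and payload shape, for illustration only.
# This is not the API of any specific AI vendor.
ASSISTANT_ENDPOINT = "https://ai-assistant.example.com/v1/complete"


def build_completion_request(prompt: str, open_files: dict[str, str]) -> dict:
    """Bundle a developer's prompt with surrounding source code as context.

    Assistants commonly attach nearby code so the model can give relevant
    suggestions. Everything in this payload is transmitted to the external
    server once the request is sent.
    """
    return {
        "url": ASSISTANT_ENDPOINT,
        "body": json.dumps({
            "prompt": prompt,
            "context": [
                {"path": path, "content": content}
                for path, content in open_files.items()
            ],
        }),
    }


request = build_completion_request(
    "Add input validation to save_patient()",
    {"models/patient.py": "def save_patient(record):\n    db.insert('patients', record)\n"},
)
print(request["url"])
```

The attached file holds no patient records, yet it still shows how those records are stored, and it travels to the vendor alongside the prompt.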

👉 Learn how we help organizations build custom medical software with AI that meets compliance requirements.

Healthcare organizations aren’t just writing code; they’re building systems that handle electronic protected health information (ePHI). Unlike most industries, healthcare software development has to balance innovation against strict compliance requirements, so every architectural decision carries regulatory consequences. Scrutiny doesn’t stop at patient records: even code that contains no patient data can reveal how PHI is processed. As a result, security and compliance are core requirements of every project, not optional extras.

And here’s the kicker: out of the box, none of today’s SaaS AI developer tools are HIPAA-compliant. Vendors like OpenAI, GitHub, or Windsurf generally won’t sign business associate agreements (BAAs) for their standard developer offerings, and they often retain prompt logs for debugging or abuse detection.

For official details, review the U.S. Department of Health & Human Services’ HIPAA guidelines.

SaaS AI tools make software development faster and easier, but they carry risks that healthcare organizations can’t afford to ignore. The concern goes beyond data privacy to security, compliance, and even intellectual property: exposing sensitive code and PHI-handling logic to third-party servers can lead to regulatory violations, security breaches, and costly legal disputes. The most significant risks healthcare organizations face when using SaaS AI development tools are outlined below.

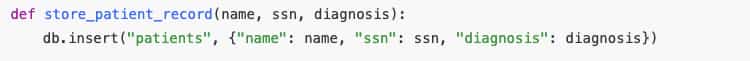

This looks harmless: it contains no real patient data. But it reveals exactly how PHI is processed and stored. Under HIPAA, that makes it part of the regulated environment, and sending it to ChatGPT or Windsurf is a disclosure.

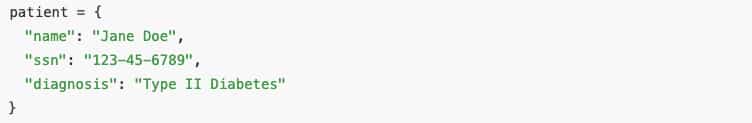

This one is obvious: it contains actual PHI. If a developer pastes it into ChatGPT, you’ve just triggered a potentially reportable HIPAA breach, with patient notifications, HHS filings, and possible press coverage to follow.
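One narrow safeguard against this second scenario is scanning text for obvious identifiers before it leaves a developer's machine. The sketch below is a hypothetical pre-submission check; the function names and patterns are assumptions, and they cover only a few formats. Code that contains no literal identifiers would pass it untouched, so a check like this reduces accidental pastes of real data without making a SaaS tool compliant.

```python
import re

# Hypothetical, deliberately incomplete patterns for a few identifier
# formats. Real PHI detection needs far broader coverage (names,
# addresses, dates, record numbers, and more).
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "date_of_birth": re.compile(r"\b(?:dob|date[ _]of[ _]birth)\b[\"']?\s*[:=]", re.IGNORECASE),
    "mrn": re.compile(r"\bMRN\s*[:=#]?\s*\d{6,}\b", re.IGNORECASE),
}


def find_phi_markers(text: str) -> list[str]:
    """Return the names of all patterns that match, sorted alphabetically."""
    return sorted(name for name, pattern in PHI_PATTERNS.items() if pattern.search(text))


def safe_to_send(text: str) -> bool:
    """Block the prompt if any identifier-like pattern is present."""
    return not find_phi_markers(text)


# Synthetic sample values, not real patient data.
snippet_with_data = 'patient = {"name": "Jane Doe", "ssn": "123-45-6789", "dob": "1980-04-12"}'
schema_only = "def save_patient(record):\n    db.insert('patients', record)\n"

print(find_phi_markers(snippet_with_data))
print(safe_to_send(schema_only))
```

Note that the name field slips through: pattern lists like this one catch only the formats someone thought to write down.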

On the surface, SaaS AI tools can seem harmless in a healthcare development setting. Many teams assume they’re safe as long as they never paste patient data directly into a prompt. That mindset leads developers to underestimate the risk of sharing code that interacts with PHI, creating a dangerous gray area. The problem is that regulators don’t draw that line: HIPAA’s protections extend to both the "real data" and the systems that handle it.

You may hear arguments like:

Here’s the reality:

Just because SaaS AI tools aren’t safe for healthcare doesn’t mean organizations have to miss out on the benefits of AI altogether. The key is adopting solutions that provide the same speed and efficiency while keeping data under your control and in compliance with HIPAA. By choosing the right infrastructure and safeguards, healthcare teams can unlock AI-driven development without exposing PHI or risking regulatory violations. Below are some of the most effective and compliant alternatives available today.

Healthcare organizations can benefit from AI in development — but only if it’s done responsibly:

Generic SaaS AI developer tools may accelerate coding, but they are not safe for healthcare codebases. Until vendors provide self-hosted deployments, BAAs, and airtight audit logs, healthcare organizations must assume that using these tools on PHI-related systems is a compliance violation.

The good news? The future of AI in healthcare development is bright, but only if it’s built on secure, compliant foundations. At Code Particle, we believe healthcare organizations shouldn’t have to choose between innovation and compliance. That’s why we’re building AI developer tools designed for regulated industries, giving teams the speed of AI with the safety of self-hosted, compliant infrastructure.

If your organization is exploring AI in software development, now is the time to act. Learn more about our healthcare software development solutions or connect with us directly. We can help you deploy AI-Enhanced Development that is:

👉 Contact us to explore how AI can transform your healthcare software development — without compromising compliance.